For the opening of the academic year, research group The Algorithmic Gaze (Sint Lucas Antwerp, KdG) organized an experiment in which elementary school children were filmed and questioned about their interests and dreams. The images were then processed by an AI algorithm and synthesized into new images, new faces, the new generation.

Project movie (in Dutch):

Method

After recording all faces, we used Figment with Google's MediaPipe to extract the face mesh from the video recordings.
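As a rough illustration of what a face mesh looks like as data: MediaPipe reports face landmarks as coordinates normalized to the image width and height. The sketch below assumes that representation and converts landmarks to pixel positions, for example before drawing them into a mask image. The `Landmark` type and the `to_pixels` helper are hypothetical names used for illustration; they are not part of Figment or MediaPipe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Landmark:
    """Hypothetical stand-in for one face mesh point, normalized to the frame size."""
    x: float
    y: float


def to_pixels(landmarks, width, height):
    """Convert normalized landmarks to integer pixel coordinates, clamped to the frame."""
    points = []
    for lm in landmarks:
        # Scale to pixels, then clamp so points slightly outside the frame stay drawable.
        px = min(max(int(round(lm.x * width)), 0), width - 1)
        py = min(max(int(round(lm.y * height)), 0), height - 1)
        points.append((px, py))
    return points
```

Clamping is a design choice for this sketch: landmarks can fall slightly outside the frame when a face is partly out of view, and clamping keeps them usable for drawing.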

We used NVIDIA's pix2pixHD algorithm, a high-resolution conditional GAN, to learn the mapping from segmented face masks to the recorded video footage.
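A conditional GAN of this kind learns from aligned pairs: each input mask has to correspond to the exact video frame it was extracted from. The sketch below shows one way such pairs could be assembled, assuming masks and frames are stored as image files that share a frame-number stem. The directory layout, file names and the `pair_by_stem` helper are hypothetical and do not describe the project's actual pipeline.

```python
from pathlib import PurePath


def pair_by_stem(mask_files, frame_files):
    """Pair each mask with the frame that shares its file stem; unmatched files are skipped."""
    frames = {PurePath(f).stem: f for f in frame_files}
    pairs = []
    for mask in sorted(mask_files):
        stem = PurePath(mask).stem
        if stem in frames:
            pairs.append((mask, frames[stem]))
    return pairs


# Example with hypothetical file names: mask 0003 has no matching frame and is dropped.
pairs = pair_by_stem(
    ["masks/0002.png", "masks/0001.png", "masks/0003.png"],
    ["frames/0001.jpg", "frames/0002.jpg"],
)
```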

We performed extensive testing and re-training, checking difficult conditions (e.g. side-facing, looking up, blinking) and the limitations of the segmentation algorithm. We discovered that there is a "sweet spot": placing your face too close to or too far from the camera introduces distortions.
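One simple way to reason about such a "sweet spot" is to measure how much of the frame the detected face covers. The sketch below assumes face landmarks are available as pixel coordinates and uses the height of their bounding box relative to the frame height. The function names and the thresholds (0.3 and 0.7) are illustrative assumptions, not values measured in this project.

```python
def face_height_ratio(points, frame_height):
    """Fraction of the frame height covered by the landmark bounding box."""
    ys = [y for _, y in points]
    return (max(ys) - min(ys)) / frame_height


def distance_hint(points, frame_height, min_ratio=0.3, max_ratio=0.7):
    """Return a coarse hint about the face's distance to the camera.

    min_ratio and max_ratio are hypothetical thresholds chosen for illustration.
    """
    if not points:
        return "no face"
    ratio = face_height_ratio(points, frame_height)
    if ratio < min_ratio:
        return "too far"
    if ratio > max_ratio:
        return "too close"
    return "ok"
```

In an interactive setting, a hint like this could be shown next to the webcam preview so that participants can reposition themselves.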

Through a custom-built app, we could control the algorithm interactively via webcam:

We invited the elementary school students back to our campus to present the results of the training, allowing them to experience and play with the model through the webcam. They discovered they could find their own likeness in the model, but also that of their friends:

A production movie of the project was presented during the academic opening, on Thursday, September 29th, in the Stadsschouwburg Antwerpen.

Credits

- Isabelle De Ridder (University of Antwerp) — project lead

- Frederik De Bleser, Lieven Menschaert — machine learning and development

- Mathias Mallentjer, Brent Meynen (Production Office) — general production

- Alexandra Fraser (Sint Lucas Antwerpen) — feedback and interviews

- Ine Vanoeveren, Imane Benyecif — testing and support

Other Collaborations

100 Days

Destroying and reconstructing memories

Assume calibration pose: this breath isn’t mine

A Haptic Feedback Exploration

Cyber Sensuality

Training an AI Dancer through PIX2PIX

Does AI Dream of Gender?

Gender Fluid AI Installation

Flesh to Foliage

A Technological Requiem

Hunger of the Pine

AI artwork around natural sustainability

Maureen

A critical mirror on AI in our society

Memories of Care

Martina Menegon research visit July 4-6 2023

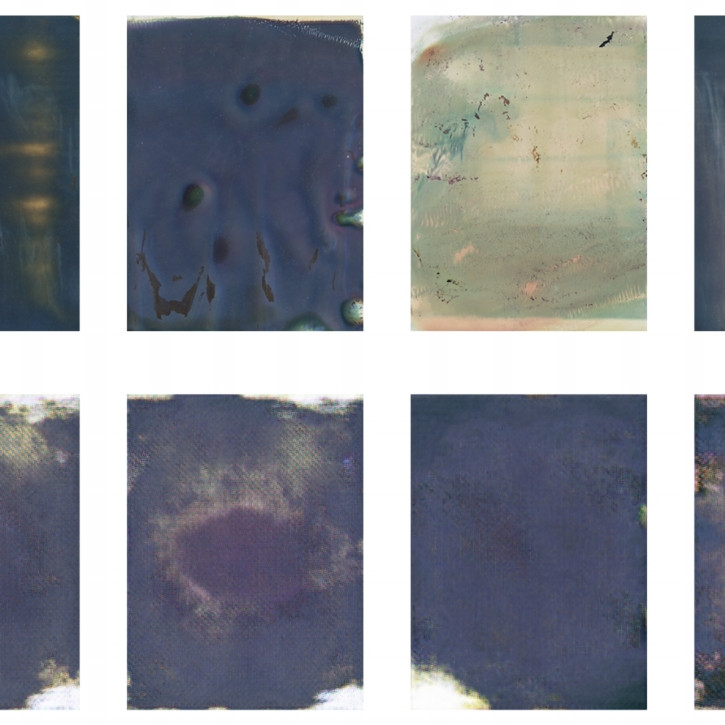

Polaroid Memories

Re-discovering found footage through GANDelve

Workshops

Teaching our tools to students